Abstract

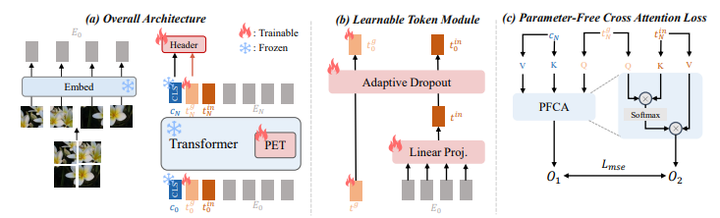

Existing fine-tuning paradigms fall predominantly into two categories: Full Parameter Tuning (FPT) and Parameter-Efficient Tuning (PET). FPT fine-tunes all parameters of a pre-trained model on downstream tasks, whereas PET freezes the pre-trained model and fine-tunes only a small number of learnable parameters. However, both approaches suffer from overfitting, especially when downstream samples are limited. This issue has been thoroughly explored for FPT but much less so for PET. To this end, this paper investigates overfitting in PET, representing a pioneering study in the field. Specifically, across 19 image classification datasets, we employ three classic PET methods (i.e., VPT, Adapter/AdaptFormer, and LoRA) and explore various regularization techniques to mitigate overfitting. Regrettably, the results suggest that existing regularization techniques are incompatible with the PET process and may even degrade performance. Consequently, we introduce a new framework named TTE (Two Tokens are Enough), which effectively alleviates overfitting in PET through a novel constraint function based on the learnable tokens. Experiments on 24 datasets spanning image classification and few-shot classification tasks demonstrate that our fine-tuning framework not only mitigates overfitting but also significantly improves PET performance. Notably, our TTE framework surpasses the highest-performing FPT framework (DR-Tune) by 1% while using far fewer parameters (0.15M vs. 85.84M).
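To make the FPT/PET contrast concrete, below is a minimal, illustrative sketch of a LoRA-style low-rank update on a single frozen linear layer, written in plain Python. The class `LoRALinear`, the helper `full_tuning_params`, the rank of 8, and the 768-dimensional layer are hypothetical choices made only for illustration, and biases are omitted for brevity. This is neither the TTE framework (whose token-based constraint function is not described here) nor any library's LoRA implementation.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Hypothetical sketch: frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pre-trained weight, shape (d_out, d_in); never updated
        d_out, d_in = len(weight), len(weight[0])
        # A (rank x d_in) is randomly initialised; B (d_out x rank) starts at zero,
        # so the adapted layer initially behaves exactly like the frozen one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        # y = W x + B (A x): frozen path plus low-rank trainable path.
        base = matvec(self.weight, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [u + v for u, v in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are learnable (PET); W contributes no trainable parameters.
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


def full_tuning_params(weight):
    """Hypothetical helper: under FPT every entry of W is updated."""
    return len(weight) * len(weight[0])


if __name__ == "__main__":
    w = [[0.0] * 768 for _ in range(768)]  # illustrative 768 x 768 layer
    print(full_tuning_params(w))                    # FPT: 589824
    print(LoRALinear(w, rank=8).trainable_params())  # LoRA-style PET: 12288
```

For this single 768×768 layer, FPT updates 589,824 weights, whereas the rank-8 low-rank update trains 12,288, which illustrates why PET methods need only a small fraction of the parameters that FPT does.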